How Google is powering its next-generation AI

Teaching software to be smart


If you paid any attention to Google's big developer conference earlier this year, you'll know that artificial intelligence is about to get big - really big. It already powers most of Google's apps in one way or another, and the other tech giants are scrambling to keep up.

So what's all the fuss about? Here we're going to dig deeper into some of the AI announcements Google shared at I/O 2017 and explain how they're going to change the way you interact with your gadgets, from your smartphone to your speakers.

The basics of AI

In broad terms, artificial intelligence usually refers to a piece of software or a machine that simulates smart, human-like intelligence. Taken loosely, even a hollow robot operated by a person behind a curtain, pretending to respond to your commands, could count as a kind of AI.

Within that you've got all kinds of branches, categories and approaches. As you may have noticed, different types of AI are better at different tasks: the AI responsible for beating humans at board games isn't necessarily going to be any good at holding a conversation in an instant messaging app, for instance.

The type of AI Google is most interested in is known as machine learning, where computers learn for themselves from huge banks of sample data. That could mean learning what a picture of a dog looks like or learning how to drive a car, but whatever the end goal, there are two stages: training and inference.

During training, the system is fed as much sample information as possible - maybe millions of photos of dogs. The smart algorithms inside the AI then try to spot the patterns in those images that suggest a dog, and that knowledge is applied in the inference stage, when the system is shown new pictures it has never seen before. The end result is an app that recognises your pets in pictures.
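To make the two stages concrete, here's a minimal Python sketch, not a description of how Google's systems actually work. It assumes each picture has already been boiled down to two made-up numbers (hypothetical "features"), and it uses a simple nearest-centroid classifier rather than a neural network: training averages the examples for each label, and inference labels a new example by whichever average it sits closest to.

```python
from math import dist

# Toy training data (hypothetical): each picture is reduced to two made-up
# feature scores, paired with the label a human gave it.
TRAINING_DATA = [
    ((0.9, 0.8), "dog"),
    ((0.8, 0.9), "dog"),
    ((0.85, 0.75), "dog"),
    ((0.2, 0.1), "cat"),
    ((0.1, 0.3), "cat"),
    ((0.25, 0.2), "cat"),
]


def train(examples):
    """Training: learn one average feature vector (centroid) per label."""
    sums, counts = {}, {}
    for features, label in examples:
        if label not in sums:
            sums[label] = [0.0] * len(features)
            counts[label] = 0
        for i, value in enumerate(features):
            sums[label][i] += value
        counts[label] += 1
    return {
        label: tuple(total / counts[label] for total in totals)
        for label, totals in sums.items()
    }


def infer(model, features):
    """Inference: label a new, unseen example by its nearest learned centroid."""
    return min(model, key=lambda label: dist(model[label], features))


model = train(TRAINING_DATA)
print(infer(model, (0.7, 0.85)))  # dog
print(infer(model, (0.15, 0.2)))  # cat
```

The key point is the split: `train` runs once over the sample data, while `infer` can then be called as often as you like on new pictures without going back to the training set.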

AI in Google's apps and engines

Artificial intelligence is already all over Google's apps, whether it's spotting which emails are likely to be spam in Gmail or recommending what you might like to listen to next in Google Play Music. In the loosest sense, any decision made by software rather than by a human could be described as AI of some kind.


Another example is voice commands in the Google Assistant. When you ask it to do something, the sound waves created by your voice are compared to the knowledge Google's systems have gained from analysing huge numbers of other audio snippets, and the app then (hopefully) understands what you're saying.

Translating text from one language into another, working out which ads best match which search results: all of these jobs that apps and computers do can be enhanced by AI. It has even popped up in the Smart Reply feature recently added to Gmail, which suggests short snippets of text you might want to use in response, based on an anonymised analysis of countless other emails.

And Google isn't slowing down, either. As we saw at I/O earlier in the year, the company is working hard to improve its AI efforts: that means more efficient algorithms, a better end experience for users, and even AI that can teach itself to get better.

Google's latest AI push

We've talked about machine learning, but there's a branch of it that Google's engineers are particularly interested in, called deep learning. That's where AI systems loosely mimic the structure of the human brain to deal with vast amounts of information.

It's a machine learning technique made possible by the massive amounts of computing power now available. In the dog pictures example above, it means more layers of analysis, more subtasks making up the main task, and the system itself taking on more of the burden of working out the right answer: figuring out for itself what makes a dog picture a dog picture, rather than being told by programmers.
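Here's a rough Python sketch of what "more layers of analysis" looks like: the forward pass of a tiny neural network. The layer sizes and weights are hypothetical, picked by hand purely for illustration; in a real deep learning system they would be learned during training, and there would be vastly more of them. Each layer takes the previous layer's outputs as its inputs, so later layers work on increasingly processed versions of the original data.

```python
import math


def relu(x):
    """Common activation: pass positive values through, clip negatives to zero."""
    return max(0.0, x)


def sigmoid(x):
    """Squash any number into the range 0..1, handy for a final score."""
    return 1.0 / (1.0 + math.exp(-x))


def dense_layer(inputs, weights, biases, activation):
    """One layer: each neuron takes a weighted sum of all inputs plus a bias,
    then applies an activation function."""
    return [
        activation(sum(w * x for w, x in zip(neuron_weights, inputs)) + bias)
        for neuron_weights, bias in zip(weights, biases)
    ]


# Hypothetical hand-picked weights: (weights, biases, activation) per layer.
NETWORK = [
    # Layer 1: 3 inputs -> 4 neurons
    ([[0.5, -0.2, 0.1], [0.3, 0.8, -0.5], [-0.6, 0.1, 0.9], [0.2, 0.2, 0.2]],
     [0.0, 0.1, -0.1, 0.05], relu),
    # Layer 2: 4 inputs -> 2 neurons
    ([[0.7, -0.3, 0.5, 0.1], [-0.4, 0.6, 0.2, 0.3]], [0.0, 0.0], relu),
    # Output layer: 2 inputs -> 1 "dog score" between 0 and 1
    ([[1.2, -0.8]], [0.1], sigmoid),
]


def forward(features, network):
    """Feed the input through every layer in turn and return the final score."""
    activations = features
    for weights, biases, activation in network:
        activations = dense_layer(activations, weights, biases, activation)
    return activations[0]


score = forward([0.9, 0.4, 0.7], NETWORK)
print(f"dog score: {score:.3f}")
```

Making the network "deeper" simply means adding more entries to `NETWORK`; the same `forward` loop handles any number of layers.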

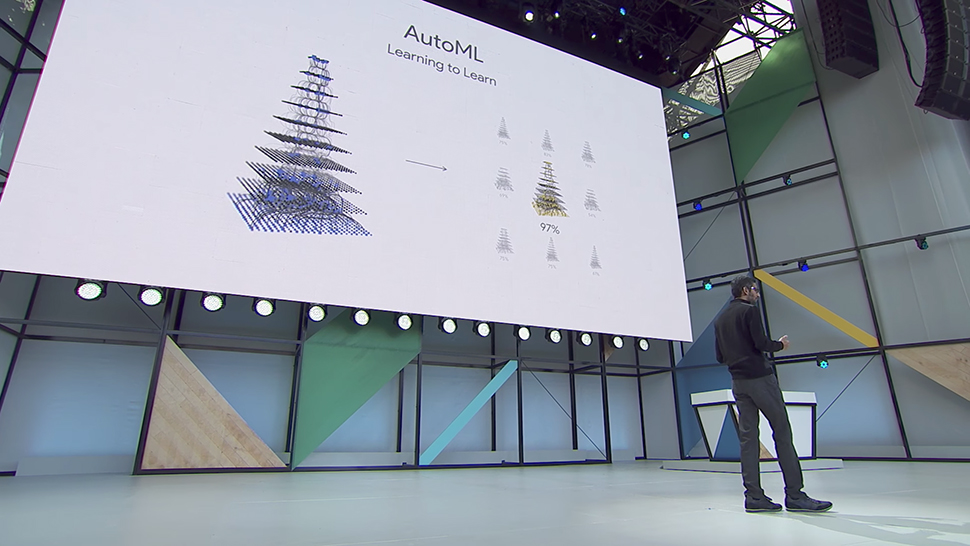

Deep learning is machine learning that relies less on code and instructions written by humans, and it's built on neural networks, which are loosely modelled on the neurons in the human brain. On stage at Google I/O 2017 we saw a new system called AutoML, which is essentially AI teaching itself: neural networks that help design other neural networks. In the past, small teams of scientists have had to work out by hand the best way to structure the most effective neural nets; now computers can start to do it for themselves.
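The core idea behind letting computers design neural nets can be sketched as a search: propose candidate network structures, score each one, and keep the best. Google's AutoML uses a more sophisticated approach; this Python sketch swaps in plain random search, the simplest form of architecture search. The search space is a hypothetical example, and `evaluate` is a made-up stand-in for the expensive step of actually training and testing each candidate network.

```python
import random

# Hypothetical search space: how deep and how wide the network could be.
SEARCH_SPACE = {
    "num_layers": [1, 2, 3, 4],
    "units_per_layer": [16, 32, 64, 128],
}


def evaluate(architecture):
    """Stand-in for 'train this network and measure its accuracy'.

    A real system would build and train each candidate; this toy formula
    simply rewards moderate depth and width so the search has a target.
    """
    depth_penalty = abs(architecture["num_layers"] - 3) * 0.05
    width_penalty = abs(architecture["units_per_layer"] - 64) / 640
    return 0.9 - depth_penalty - width_penalty


def random_search(trials, seed=0):
    """Sample random architectures and remember the best-scoring one."""
    rng = random.Random(seed)
    best_arch, best_score = None, float("-inf")
    for _ in range(trials):
        candidate = {name: rng.choice(options) for name, options in SEARCH_SPACE.items()}
        candidate_score = evaluate(candidate)
        if candidate_score > best_score:
            best_arch, best_score = candidate, candidate_score
    return best_arch, best_score


best, score = random_search(trials=50)
print(best, round(score, 3))
```

Random search is wasteful because it learns nothing from earlier trials; more advanced systems use the scores of past candidates to steer which designs they try next.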

On its servers, Google runs an army of processors called Cloud TPUs (Tensor Processing Units), designed to handle all this deep thinking. Google also makes some of its AI available to everyone through TensorFlow, its open-source machine learning library: developers can plug the smart algorithms and machine learning power into their own apps, if they know how to harness it. In return, Google gets the best AI minds and apps in the business using its own services.

Google's AI future

There was no doubt during the I/O 2017 keynote that Google thinks AI will be the most important area of technology for the foreseeable future - more important, even, than how many megapixels it's going to pack into the camera of the Pixel 2 smartphone.

You can therefore expect to hear a lot more about Google and artificial intelligence, from smart, automatic features in Gmail to map directions that know where you're going before you do. The good news is that Google seems keen to bring everyone else along for the ride too, making its platforms and services available for others to use and raising the level of AI across the board.

One of the biggest advances you'll see on your phone is in the quality of digital assistant apps, which are set to take on a more important role: choosing the apps you see, the info you need, and much more. We've also had a glimpse of Google Lens, a smart camera feature that lets your phone recognise what it's looking at and act on it, such as identifying a flower or connecting to a Wi-Fi network by reading the details off a router's label.

The AI systems being developed by Google go way beyond our own consumer gadgets and services too: they're being applied in medicine, where deep learning systems can help spot signs of certain diseases, potentially earlier than doctors can, because they can draw on so much more data.

Dave has over 20 years' experience in the tech journalism industry, covering hardware and software across mobile, computing, smart home, home entertainment, wearables, gaming and the web – you can find his writing online, in print, and even in the occasional scientific paper, across major tech titles like T3, TechRadar, Gizmodo and Wired. Outside of work, he enjoys long walks in the countryside, skiing down mountains, watching football matches (as long as his team is winning) and keeping up with the latest movies.