T3 Interview: BAE Systems' head of strategic tech talks next-generation military hardware!

We get a glimpse into the future of AR, smart materials, AV, electrification, AI and more

Andy Wright - Director of Strategic Technology, BAE Systems

As BAE Systems is at the forefront of numerous technological fields, including many that are currently seeing great uplift in the commercial sector, such as autonomous vehicles, virtual reality, augmented reality, electrification, smart materials and AI, T3 recently called BAE Systems' Director of Strategic Technology, Andy Wright, to chat about some of the company's most impressive and forward-thinking work.

What follows is an enlightening snapshot into the work of one of the UK's most celebrated defence, security and aerospace companies.

T3: First up Andy, can you talk briefly about who you are and your background in the industry?

Andy Wright: So I'm Director of Strategic Technology at BAE Systems in the UK, and my role across the whole of the UK is really about understanding our plans for technology: what technology the business needs, both now and in the future. It also covers the relationships we have with the different people who supply technology to the business, be they universities or small businesses and enterprises. So it is very much a coordinating role, and one with a visionary component in thinking about what technology is going to impact the future of the business.

T3: What's your position on AR tech?

AW: AR has been around for quite a while, and the company has been very active in this area for a long time. I think that now, with the advance of screens and the ability to immerse yourself not just from a virtual reality point of view but also from an augmented one, with glasses that let you lay a computer world on top of the real world, it is becoming very, very important.

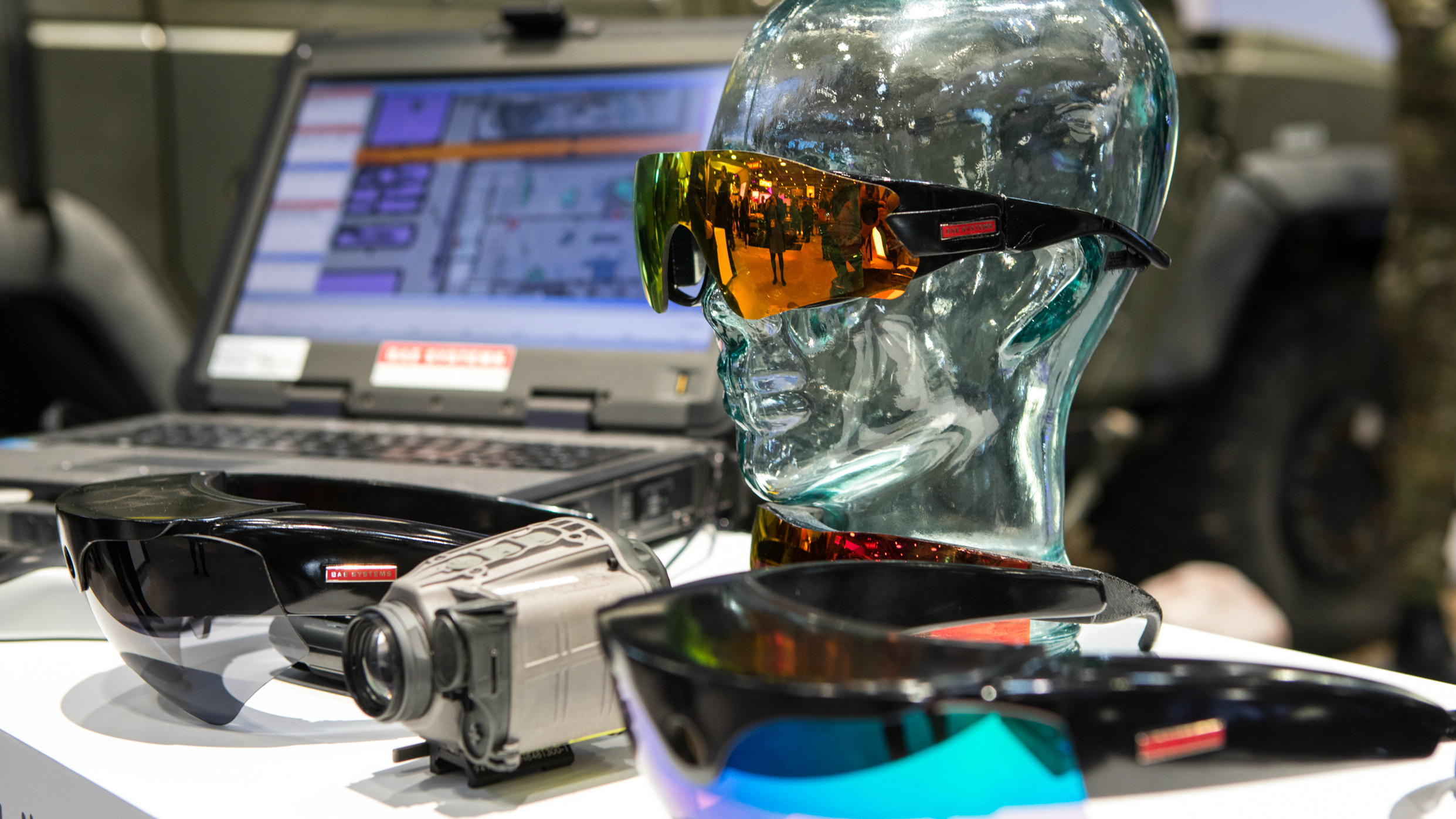

From our perspective you really need to focus on two things - firstly that within the business we've produced a lot of really important equipment, such as the Striker II helmet, which is a helmet we've made for use in the Typhoon aircraft that has the whole of the head-up display on the inside of the visor. This allows the pilot to continue to look outside the cockpit and still have the information on the visor coming up front. The Striker II also has got an integrated infrared camera so you can overlay infrared imagery onto that screen, and it also allows us to know where the pilot is looking, even if that is through the bottom of the cockpit.

So within the business there are some really good examples of integrated technology that go beyond what you can buy off the shelf right now. And we've now been looking into taking that sort of technology into AR glasses for the ground soldiers of the future: wearable glasses that can give a soldier an infrared display and tactical displays so they know who is where and what's going on.

We also do a lot of work in that area on making our head-up displays very lightweight, with a lot of compact computing. This is all coming out of the Rochester part of our business.

And while that is about the kit and technology, secondly we also do a lot of work in thinking about how we use AR. We look at using this for training our people, such as our apprentices in our Samlesbury centre where we use VR and AR to help them understand the plant and the machinery, as well as the aircraft they are going to work on.

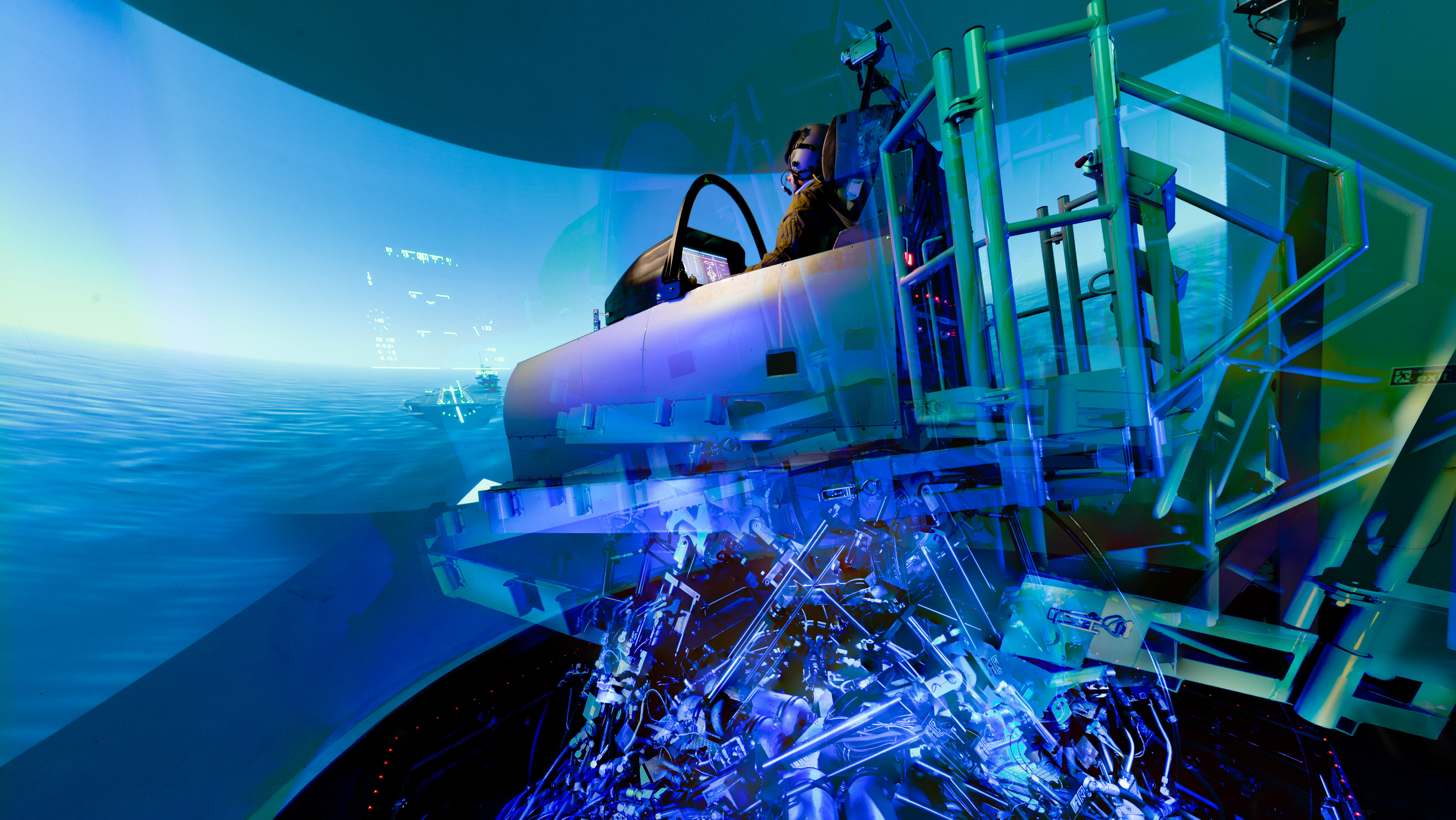

We also use the technology in our design work, so people can immerse themselves in a large design, understand where various components go and visualise the build, and also in advanced training. In advanced training we are incorporating AR systems into the process of understanding what the future cockpit would look like. We can bring pilots into that environment and ask them to comment and give us feedback, and we can bring engineers into that environment and start the design process. That is capability and technology that we're not only building ourselves, but also working on with others, such as Williams F1, who are providing some of that training capability.

So yes, without doubt both AR and VR are going to be there in the future. We live in a world where digesting ever-increasing amounts of data, making sense of it, and then providing it to a user such as an engineer, pilot or soldier so they can make the right decision at the right time, is crucial. And technology such as AR helps us do that.

T3: How is BAE Systems integrating smart materials into its products and projects?

AW: We do quite a lot with smart materials. Revolutions in materials have come out of our business for quite a long time - think of carbon fibre and various alloys, for example - and we are always looking at potential materials that will be stronger, lighter, more durable and easier to machine than what we have today.

So yes we are looking at materials that are smart and can sense and understand their environment, and one of the novel areas we are currently looking at is in partnership with quite a small business on intelligent fabrics. So we've got something called Broadsword, which is a fabric that allows you to pass information, data and power through it.

Now, if you were to do that at the moment you'd embed cables into the fabric, and as you wore the fabric the cables would break, and it also would not be particularly robust. With Broadsword we use a novel e-textile material that lets us weave the means of transmitting those things into the fabric itself, removing the cables. This novel material came out of a company called Intelligent Textiles Limited, and when we started working with them they were actually producing it on a hand loom; we've since worked with them to manufacture it on a far bigger scale.

So a soldier can wear a vest. One of the problems soldiers have now is that a lot of their kit is battery powered, so they have to carry lots of different batteries. But with the e-textile we can have a single battery in the vest that all the different kit the soldier wants to power or charge can plug into. The smart-material vest has a USB-style connector, and all the power and data go through the fabric itself. It's quite novel and revolutionary.

T3: Momentum with autonomous tech is gathering pace right now, as it is with electrification - how is BAE developing and introducing this technology?

AW: Well, I think this is another area of technology that is starting to come of age. We've been looking at autonomous vehicles for decades now, so to understand the shift you need to look at what has changed. What has happened is that we now have the communications, computing power and sensors to drive the algorithms that allow a machine to understand its environment, work out where it is, and route itself.

For us at BAE, as I said, autonomy is nothing new. In the air arena, for instance, the Typhoon is able to fly itself while the pilot provides command instructions, such as where to go, what targets to find and how to engage them. That's why a modern Typhoon aircraft has only one pilot, whereas a Lancaster bomber may have had a crew of five to seven people. Our Taranis unmanned combat aircraft system is also worth noting in terms of our work in autonomy.

And we see autonomous tech in the commercial automotive industry, don't we? You see lane following, or adaptive cruise control, where the car senses the vehicle in front and moderates its speed, or systems that warn the driver they are drifting over a white line.

Within our environment we're looking at how we use that autonomy in other domains, for instance the maritime domain. Last year we ran a small autonomous boat in Unmanned Warrior, a UK MOD programme to demonstrate the ability to integrate unmanned boats into the command system. We fielded two systems into it, the first called Jekyll, which is a large rigid inflatable. On that inflatable we fitted a number of different sensors, including electro-optic cameras, radar to detect obstacles and objects, and GPS so it could work out where it was, and we added a smart brain to process the command instructions.

And with the Jekyll system you can either drive it yourself via remote control or it can operate autonomously. It can go from A to B, you can launch drones off it, it can go out to another ship and provide surveillance information to come back, or you can use that for instance to coordinate with a boarding party. So you could have one boat with marines on that is going to board a possible pirate vessel and send Jekyll round the back of the boat with its camera to work out, say, whether the people on board are throwing contraband overboard and then relay that information back.

So what this technology really lets us do is understand not just how it works but how we would want to use it in the future.

What is also important is that a lot of people talk about autonomy in terms of the different levels we are going to have, and I still think we are quite a long way from what is known as Level 5 autonomy, meaning autonomous vehicles that can operate in any environment. But one of the key things we are interested in is how we will actually use these autonomous vehicles within the environment they need to work in. How do you embed the appropriate command and control, and how do these AV systems fit into our command and control systems? Any battlefield has a commander in charge, and that commander will have non-autonomous assets, such as people and planes, as well as autonomous assets - so how we bring those together is very important.

Which brings me onto another thing we demonstrated in Unmanned Warrior, a command and control system called ACER, which we worked on with ASV Ltd with support from suppliers including Deep Vision Inc and Chess Dynamics Ltd. ACER allows us to command and control the boats to understand where we actually want them to go and what we want them to do. Basically, how we want the AV to work. And understanding how you do that when you've got lots of different vehicles and assets within a military environment, which is often very complicated and we're never quite sure what is going on, is really quite important.

T3: Finally, what piece of recent commercial tech has impressed you personally?

AW: Apart from my iPhone! Well, from my point of view, the thing that always intrigues me is that if I asked you what technology we will be using in, say, five years' time, it would be really hard to tell. As we've seen with AR and VR, with soldiers wearing those sorts of glasses, we can imagine people in general wearing much more technology in the future than they do today. But I think one of the things that is really going to drive advancement going forward is AI. I don't think it is going to control our lives; I think it is going to be a real enabler. It is going to allow us to do things we've found quite difficult in the past, such as getting various systems to work seamlessly with each other, and perhaps even benefit people who are partially sighted or hard of hearing through future technologies.

AI is also going to help us find the information we need more easily in the deluge we get today, which is a very modern day problem. In the past you never had enough information, and now we've got far too much - so how we make sense of it is going to be really important.

Rob has been writing about computing, gaming, mobile, home entertainment technology, toys (specifically Lego and board games), smart home and more for over 15 years. As the editor of PC Gamer, and former Deputy Editor for T3.com, you can find Rob's work in magazines, bookazines and online, as well as on podcasts and videos, too. Outside of his work Rob is passionate about motorbikes, skiing/snowboarding and team sports, with football and cricket his two favourites.